Take a moment and count something. The number of tabs open in your browser. The steps from your desk to the coffee machine. The days left until the weekend. It’s an act so effortless, so ingrained, that it feels less like a thought and more like a reflex. We are, by our very nature, a counting species. But have you ever stopped to wonder where this obsession began? Not with the grand equations of physicists or the complex ledgers of accountants, but with the humble, everyday act of tallying things up?

This is the story of a journey: a silent, millennia-long migration from the tangible to the abstract, from the physical weight of a pebble in the palm to the airy glow of a pixel on a screen. It’s a saga of human ingenuity, born not in lofty universities but in the mud of riverbanks, the dust of marketplaces, and the quiet corners of domestic life. It is the story of everyday counting, and of how numerical thinking helped make us who we are.

We often frame the history of mathematics as a parade of geniuses: Pythagoras, Newton, Euler. But beneath their monumental contributions flows a deeper, older current: the organic development of numeracy by ordinary people solving ordinary problems. This is the story of how we learned to quantify our world, and in doing so, began to understand it.

The Dawn of Quantity: Notches, Stones, and the Human Body

Before numbers, there was quantity. Before words, there were gestures. The very first counting systems weren’t written or spoken; they were tactile and embodied, carried out with objects in the hand and with the body itself.

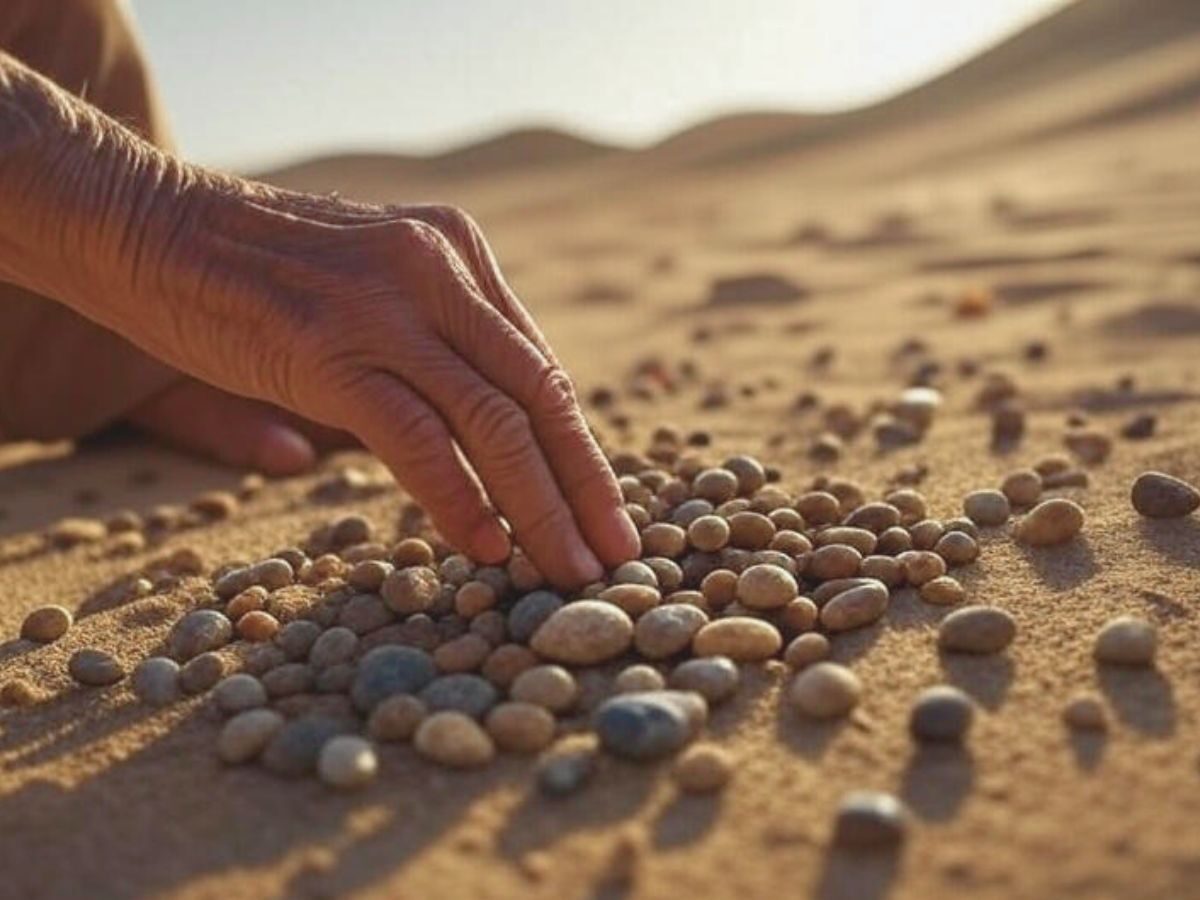

Imagine a Neolithic herder at sunset who needs to make sure every sheep has returned from the pasture. The herder has no concept of the number “twenty-seven.” Instead, they rely on one-to-one correspondence: as each sheep enters the pen, they drop a pebble into a pouch. One stone for one sheep. The pile of pebbles is the flock in a tangible, externalized form. This is the power of the concrete counting token, and it was a revolutionary cognitive leap. It allowed our ancestors to offload memory onto the physical world, a primordial form of data storage.

Similarly, the enduring tally mark, a single line for a single unit, is a permanent record of quantity. The Ishango bone, a baboon fibula found in what is now the Democratic Republic of the Congo and dated to more than 20,000 years ago, is etched with notches arranged in groups. Was it a lunar calendar? A record of game hunted? We may never know for sure, but its message is clear: a human was keeping track. This kind of simple tallying is the bedrock on which mathematics was built.

Perhaps the most intimate counting device was the human body itself. Body-tally systems were used across many cultures. You could count on your fingers, of course, a method so natural we still teach it to toddlers. But why stop there? Some New Guinea highland peoples, among others, developed elaborate systems in which each finger, then the wrist, elbow, and shoulder, and then points on the head such as the eye and nose, stood for a specific number, letting them count well beyond ten on their own bodies. This was somatic arithmetic, using the body as an abacus. It turned the self into a walking, talking calculator.

The Great Abstraction: From “Three Stones” to “Threeness”

The pivotal moment in our journey, the true spark of mathematical genius, was the move from concrete counting to abstract number. This was the moment we stopped thinking about “three sheep” or “three stones” and grasped the concept of “three” itself.

This is a fiendishly difficult cognitive leap. For a child, it’s the difference between pointing at two apples or two giraffes and understanding that the “two-ness” they share is identical, despite the vast difference between the objects. This abstraction of number is what separates numeracy from mere tallying.

Language was the key that unlocked this door. The development of number words created a mental placeholder for these abstract concepts. You could now talk about “three” without needing a physical referent. This allowed for comparative quantification—is my three more or less than your four?—and eventually, calculation.

Different cultures abstracted at different speeds and in different ways. The ancient Mesopotamians, with their cuneiform script, used a base-60 (sexagesimal) system for advanced astronomy and commerce, a legacy that survives in our 60-minute hours and 360-degree circles. The Maya, brilliant astronomers, used a base-20 (vigesimal) system that included the concept of zero as a placeholder. The Romans, pragmatic and administrative, developed a numeral system that was excellent for recording amounts (think of dates carved into stone monuments) but notoriously clumsy for actually performing calculations.

This era was defined by its physical media. The medium was inextricably linked to the message: numbers were pressed into clay, scratched into wax, painted onto parchment, or represented by piles of tokens. The cognitive load of early arithmetic was immense. Try multiplying DCLXVII by XLIV using only Roman numerals. The symbols offer no columns to line up, so in practice such problems were worked out on abacuses and counting boards, tools that acted as external processors for a difficult mental task.
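To make the contrast concrete, here is a minimal Python sketch that does what a modern reader does instinctively: translate each Roman numeral into positional Hindu-Arabic form, where the multiplication becomes routine. The function name `roman_to_int` and its symbol table are illustrative, not taken from any library, and the sketch assumes standard subtractive notation, where a smaller symbol placed before a larger one is subtracted (IV = 4).

```python
# Value of each Roman symbol (illustrative table, standard values).
ROMAN_VALUES = {"I": 1, "V": 5, "X": 10, "L": 50, "C": 100, "D": 500, "M": 1000}


def roman_to_int(numeral: str) -> int:
    """Convert a Roman numeral to an integer, assuming subtractive notation:
    a symbol followed by a larger one is subtracted instead of added."""
    total = 0
    for i, symbol in enumerate(numeral):
        value = ROMAN_VALUES[symbol]
        # Look ahead one symbol: if it is larger, this one is subtracted (the I in IV).
        if i + 1 < len(numeral) and ROMAN_VALUES[numeral[i + 1]] > value:
            total -= value
        else:
            total += value
    return total


a = roman_to_int("DCLXVII")
b = roman_to_int("XLIV")
print(a, b, a * b)  # 667 44 29348
```

DCLXVII works out to 667 and XLIV to 44, so the product is 29,348. Once the numbers are in positional form, the multiplication is ordinary schoolbook arithmetic. What made it hard was the notation, not the quantity.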

The Paper Revolution: Standardizing the Everyday

If the abstraction of number was the spark, the invention and spread of cheap paper and the Hindu-Arabic numeral system was the explosion that lit the world.

The Hindu-Arabic numerals (0,1,2,3…) we use today originated in India, were adopted by Arab scholars, and finally spread to Europe around the 12th century. Their advantages were profound:

- Place Value: A “5” in the units place means something different from a “5” in the tens place. This simple, powerful idea, positional notation, made complex calculations vastly simpler.

- The Number Zero: The invention of zero as a number, not just a placeholder, was a philosophical and practical earthquake. It completed the place-value system and provided the foundation for higher mathematics.

- Symbolic Calculation Fluency: Written arithmetic, such as long multiplication and long division, became practical, and eventually so did algebra. You could work through a problem step by step on paper, with every intermediate result in view.
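Place value and zero are easiest to appreciate in action. The Python sketch below, built around two hypothetical helpers, `from_digits` and `to_digits`, treats a numeral as a list of digits in any base: reading it multiplies the running total by the base and adds the next digit, and writing it repeatedly divides by the base. The same code covers base 60, echoing the Mesopotamian system, and a simplified, strictly base-20 system (the actual Maya calendar count did not follow base 20 strictly, which this ignores).

```python
def from_digits(digits: list[int], base: int) -> int:
    """Read a positional numeral: each digit is worth digit * base**position,
    with position 0 at the rightmost (units) place."""
    value = 0
    for d in digits:
        # Shift everything one place to the left, then add the new digit.
        value = value * base + d
    return value


def to_digits(n: int, base: int) -> list[int]:
    """Write n positionally: the remainders of repeated division are the digits."""
    if n == 0:
        return [0]  # zero needs its own symbol to occupy the place
    digits = []
    while n > 0:
        n, remainder = divmod(n, base)
        digits.append(remainder)
    return digits[::-1]  # remainders come out units-first, so reverse them


print(from_digits([5, 0], 10), from_digits([5], 10))  # 50 5: same "5", different place
print(to_digits(3725, 60))  # [1, 2, 5]: 1 hour, 2 minutes, 5 seconds
print(to_digits(400, 20))   # [1, 0, 0]: zeros hold the empty places
```

Notice that `to_digits(400, 20)` needs two zeros: without a symbol for an empty place, 400 written in base 20 could not be told apart from 1.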

This combination of cheap paper and an efficient numeral system democratized numeracy. It wasn’t just for priests and royal accountants anymore. Merchants could balance their ledgers. Farmers could calculate yields. Tailors could measure cloth. The domestic mathematics of running a household became more precise. Recipes, budgets, and schedules could be written down and standardized. This was the true birth of everyday calculation as we know it—a standardized, portable system of numbers that integrated seamlessly into the fabric of daily life for millions.

The Mechanical and The Electric: Offloading Mental Labor

For centuries, paper was the pinnacle. But the human desire for speed and accuracy, particularly in commerce, engineering, and science, pushed for new tools. The next step in our journey was about automating the process of calculation itself.

The 17th century saw the first sophisticated mechanical calculators, such as Blaise Pascal’s Pascaline and Gottfried Wilhelm Leibniz’s stepped reckoner. These marvels of gears and levers could add and subtract, and Leibniz’s design could also multiply and divide. They marked a shift from working every step by hand to letting a mechanism carry the steps out. The mental labor of calculation was being physically offloaded onto a machine.

The slide rule, an analog computer based on logarithms, became the essential tool for scientists and engineers for over three centuries. It required skill and understanding to operate, representing a partnership between human and machine. These devices were the precursors to the cognitive offloading revolution. They were the first step on the path to making calculation something that happens for us, rather than by us.
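The slide rule’s trick is the identity log(a) + log(b) = log(ab): sliding one logarithmic scale along another adds two lengths, and reading the result off the scale turns that sum back into a product. A minimal Python sketch of the idea follows. The function name is hypothetical, and rounding to three significant figures is an assumption meant to mimic roughly how precisely a person can read a standard scale. Real slide rules showed only the leading digits and left the user to track the decimal point; the sketch skips that step and keeps the magnitude.

```python
import math


def slide_rule_multiply(a: float, b: float, sig_figs: int = 3) -> float:
    """Multiply the way a slide rule does, for positive a and b:
    add lengths proportional to log10(a) and log10(b), then read back the product."""
    length = math.log10(a) + math.log10(b)  # sliding one scale along the other adds lengths
    product = 10 ** length                  # reading the scale undoes the logarithm
    # Assumption: the eye resolves only about three significant figures.
    return float(f"{product:.{sig_figs}g}")


print(slide_rule_multiply(667, 44))  # 29300.0, versus the exact 29348
```

Against the exact answer of 29,348, the sketch returns 29,300: close enough for much practical work, but only if the user understands how much precision the problem actually needs.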

The 20th century then brought the electric calculator, followed in the 1970s by the affordable electronic pocket calculator. With the press of a button, complex calculations were completed instantly, and the mental effort of routine arithmetic all but vanished for most people. This had a profound, and often debated, impact on our relationship with numbers. Did it make us lazier? Or did it free our cognitive resources for higher-order problem-solving and conceptual understanding? It likely did both. The calculator, and later the computer, changed the goal of numeracy from being able to compute to being able to interpret.

The Pixelated Present: Counting in the Ambient Data Sphere

Today, we live in the latest stage of this long journey: pixel-based numeracy. Our counting is done for us, silently and perpetually, by the devices that surround us.

Your smartphone is the ultimate ambient quantification device. It counts your steps, your heart rate, your hours of sleep, and your screen time. It tracks the number of calories you consume and the miles you travel. Algorithms count your “likes,” curate your news feed based on engagement metrics, and adjust prices in real time based on demand. This is passive data aggregation on a scale the Neolithic herder could never have imagined.

We don’t even need to ask for the count most of the time. We are presented with it. A notification tells us we’ve only reached 60% of our daily step goal. An app informs us we’ve spent 5 hours on social media this week. Our car’s dashboard displays real-time fuel efficiency calculations. This is seamless numerical integration—data woven into the very interface of our lives.

This represents a complete inversion of our starting point. We began by actively, physically externalizing our counts onto pebbles and bones. Now, counts are generated automatically by our environment and internalized into our decision-making. The cognitive ergonomics of counting have been utterly transformed. The effort has been removed, but so has a layer of conscious engagement. We trade awareness for convenience.

The Future: From Counting Things to Understanding What Counts

So, where does this journey from pebbles to pixels leave us? Has the evolution of numerical notation made us more numerate, or has it simply made numbers more ubiquitous?

The challenge is no longer how to count, but what to count, and how to interpret the endless stream of quantitative information thrown at us. The modern form of practical numeracy is data literacy. It’s the ability to question a statistic, to understand a graph, to recognize a biased sample, and to see the humanity behind the metrics.

Perhaps the next evolution is not in the tools themselves, but in our relationship with them. Maybe we will see a swing back towards tangible numeracy interfaces—physical objects that represent data, helping us to feel and understand information in a way a screen cannot. We are already seeing hints of this in educational tools for children.

The journey of everyday counting is the story of humanity learning to extend its mind. We invented pebbles to remember, numerals to abstract, paper to standardize, machines to compute, and pixels to immerse ourselves in data. Each step made counting easier but also changed us, reshaping our thoughts, our societies, and our perception of truth.

The next time you absentmindedly check the step count on your smartphone, remember the long path we’ve traveled. Remember the herder with their pebbles, the student with their abacus, and the clerk with their ledger. That simple number on your screen is the endpoint of a twenty-thousand-year human collaboration, a silent, cumulative masterpiece of everyday thought, dedicated to answering the most basic and profound of questions: “How many?”
